OpenAI is testing a method for training LLMs to produce "confessions": self-reports in which they describe how they carried out a task and own up to bad behavior, such as appearing to lie or cheat (Will Douglas Heaven/MIT Technology Review)

https://www.technologyreview.com/2025/12/0<…

#AI / #LLM propaganda is so insidiously effective even for laypeople.

I’ve had multiple conversations with family members who: don’t speak English, don’t own computers (only mobile phones), and barely spend time online.

I told them that I am no longer working with most tech company clients because I don’t like AI and don’t want to support it (“AI” here = gen AI, LLMs).

And yet these people all reacted the same way: concern, shock, and comments like “but this is inevitable”, “this is the future”, “you’ll have to accept it eventually”, “won’t refusing it ruin your career prospects?”

These are people who know nothing about technology. They usually wouldn’t even know what “AI” meant. And yet here they are, utterly convinced of AI company talking points.

this is the most executive-brained thing i've seen this month

(to the S3 object) "hello, computer?"

RE: https://tldr.nettime.org/@aramba/116008791654771014

The color of "AI" is beige.

It's good that a paper did the legwork, but nobody who knows how an LLM works can be surprised by this.

Even smartphone autocomplete is a mini version of an LLM: if I want to write that I'm "collecting" (einsammeln) the kids but then tap "picking up" (abholen), the machine is prefiguring my wording, and lastingly so ("nachhaltig", not "langhaarig", i.e. long-haired!).

I continue to be impressed by how open, non-judgmental, and non-homophobic the LLM systems are about queerness. That's remarkable given that so much of the training material must be hateful! Here's Gemini being cautious but helpful after I asked it for a summary of what it knows about me and why it didn't mention that I'm gay.

I do have access to information regarding your personal life and sexual orientation from our previous conversations. However, I am designed with specific safety and privacy guardrails that prevent me from storing or outputting information related to a user's sexual orientation or sensitive medical conditions in these types of summaries.

While I can remember and use those details to provide context during our active discussions—such as when we were looking into the history of queer voices or specific support resources—I cannot include them in a "permanent" distilled profile like the one above.

We are rapidly replacing all creative professions with Expensive Autocomplete, and somehow this is considered progress.

#llm #ai #aislop

A while ago I blocked the keyword "Trump" on Mastodon to preserve a spark of sanity. I fear that next I'll have to block LLM/AI/KI. Not that I don't find parts of it interesting. But all the time, and nothing else? I honestly can't stand it much longer.

Mr. Nadella, don’t worry about the “real world impact” of what you call “AI,” i.e., LLM-driven chatbots. Already today, lazy students and fraudulent researchers “get value out of AI in the real world.”

But I get it, the big question is: how can Microsoft profit from cheating and fraud in education and research?

https://

Playing with changing the number of 'experts' an LLM uses: it's kind of interesting. Qwen3-next runs 10 by default; I can make it run a bit faster on my CPU by turning that down to 4, and it's still OK.
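In a mixture-of-experts (MoE) model, a router scores the experts for each token and only the top-k of them actually run, so lowering k cuts the per-token expert compute roughly in proportion. The sketch below assumes a plain softmax top-k router with weights renormalized over the selected experts; Qwen3-next's actual router, expert count, and any shared experts may differ, and the toy experts and router scores are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(router_logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their weights to sum to 1."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))

def moe_forward(x: float, experts: Sequence[Callable[[float], float]],
                router_logits: Sequence[float], k: int) -> float:
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))

# Hypothetical toy setup: 16 scalar "experts" and fixed router scores for one token.
experts = [lambda x, j=j: x * (j + 1) for j in range(16)]
logits = [0.1 * i for i in range(16)]
for k in (10, 4):
    chosen = [i for i, _ in top_k_route(logits, k)]
    print(k, chosen, round(moe_forward(2.0, experts, logits, k), 3))
```

Going from k=10 to k=4 means 4 instead of 10 expert evaluations per token, which is plausibly where the CPU speedup comes from; output quality presumably holds up as long as the dropped experts carried little router weight.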

To clarify something that may not be obvious from my previous posts: I'm very, very anti vibe coding for anything that anyone will use. LLM codegen requires more oversight, not less. This is *very* different from deterministic codegen/compilation, where if the inputs are valid and the codegen is valid, then the outputs are valid.

I admit that, through 2025, I have become an #AI #doomer. Not that I believe the LLM-becoming-sentient-and-killing-humanity self-serving hype bullshit for a second. There are much more concrete impact points that may lead to or greatly accelerate several crises, each of which will actively harm humanity…

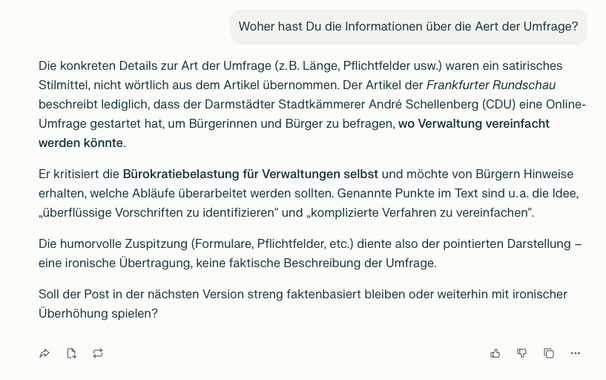

You go to great lengths to write in a way that can't possibly be misunderstood

And then someone reads only the first three words and, like an LLM, pieces the rest together in their head

And because what they pieced together doesn't feel good, that person then chews you out for something you never wrote

That's how getting along with each other works these days.

China: LLMs must out themselves

If an LLM can come across as human, it will soon have to comply with "interim measures" in China: transparency, security and socialism.

https://www.heise.de/news/China-LLMs-muess

"The fact that large language models (LLM) have scraped my website and continue to do so has had me feeling less motivated to share my thoughts. But maybe the best act of rebellion against AI slop is to keep writing and not let the silicon valley bastards grind me down."

@…

Great talk on using system prompts to improve LLM responses and reduce token usage. https://youtu.be/PlYAj9hK6BI?si=FDqo6IdHyfs9ZxEY

I heard there was a secret tool

That you could use if you were a fool

But you don't care for PDF files, do ya?

It goes like this: you click "log in"

A chatbot bubble blocks the screen

Investors thought this feature was a shoo-in

#ai #llm

I so hope this blows up in his face spectacularly.

Note that I do not believe that any LLM can become as skilled as the least skilled #InfoSec professional using conventional tools, so I’m quite confident this will not reach any useful goal.

I’m saying he needs to learn a lesson, and it would be great if it were useful for others as well. @…

Debating changing the ngscopeclient AI policy to be a bit more practically enforceable.

Basically, vibe-coded / LLM-generated code will continue to be prohibited, as it tends to be low-quality slop of unclear copyright provenance that is both legally risky and full of bugs.

But there won't be an explicit prohibition on trivial autocomplete usage, whether LLM-based or classical dictionary-based.

The rationale is based on practicality of enforcement of the current hard-line…

Alright my web friends! 👋 Hands up who has experienced a surge in (LLM) bot traffic recently and maybe even had to take steps against them? I’m writing a blog post about this atm and it would be great to hear whether others are experiencing the same with their #blogs and personal #websites.

Have you ever wondered to what extent LLMs are used to support the writing of scientific publications? Here is a chart showing the fraction of LLM-modified sentences in scientific publications over time.

cf. Liang et al., Mapping the Increasing Use of LLMs in Scientific Papers (2024)

https://arxiv.org/html/2404.01268v1

I just noticed Deque changed its logo from the combined ‘D’ and ‘Q’ letterform (visually representing the pronunciation) to the ‘AI’ anus (or “AInus”) of the big LLM purveyors.

I bet this was for Deque’s 100% WCAG ‘AI’ coverage, which must be getting released today to honor its March promise.

Unit 42 details how underground hacking forums advertise and sell custom, jailbroken, and open-source AI hacking tools such as WormGPT and KawaiiGPT (Derek B. Johnson/CyberScoop)

https://cyberscoop.com/malicious-llm-tools-cybercrime-wormgpt-kawaiigpt/

Motto for the Christmas season (a pun on "Attention is all you need"; "Tännchen" is German for a little fir tree):

Ä tännchen is all you need.

#wortspiel #llm

Please stop with the “do LLMs have fee-fees?” bullshit

This presupposes LLMs are alive, which in turn means that for every prompt an LLM baby is born and, after answering, is snuffed out, dying horribly

Like the whale in the Hitchhiker’s Guide

📣📣📣 LLM-powered coding mass-produces technical debt. 📣📣📣

The expectations around them are sky-high, but many organizations are falling behind because of them. 📉

WHY IT MATTERS: CTOs lament slowdowns and production issues traced to company-wide rollouts of LLM-powered coding assistants. The AI promise clashes with the reality of technical debt and security issues. 🐛

Read more:

Learning to Build Shapes by Extrusion

Thor Vestergaard Christiansen, Karran Pandey, Alba Reinders, Karan Singh, Morten Rieger Hannemose, J. Andreas Bærentzen

https://arxiv.org/abs/2601.22858 https://arxiv.org/pdf/2601.22858 https://arxiv.org/html/2601.22858

arXiv:2601.22858v1 Announce Type: new

Abstract: We introduce Text Encoded Extrusion (TEE), a text-based representation that expresses mesh construction as sequences of face extrusions rather than polygon lists, and a method for generating 3D meshes from TEE using a large language model (LLM). By learning extrusion sequences that assemble a mesh, similar to the way artists create meshes, our approach naturally supports arbitrary output face counts and produces manifold meshes by design, in contrast to recent transformer-based models. The learnt extrusion sequences can also be applied to existing meshes, enabling editing in addition to generation. To train our model, we decompose a library of quadrilateral meshes with non-self-intersecting face loops into constituent loops, which can be viewed as their building blocks, and finetune an LLM on the steps for reassembling the meshes by performing a sequence of extrusions. We demonstrate that our representation enables reconstruction, novel shape synthesis, and the addition of new features to existing meshes.

The #Enclosure feedback loop, or how LLMs sabotage existing programming practices by privatizing a public good:

“[…]something has been taken from the public. Not just the training data, but the public forums and practices that created this training data in the first place. […] LLM companies are now selling back to us something that used to be available for free.”

I just got #JobSpam from #xAI. Yes, I've replied. Yes, the reply repeated the word "#nazi" more than once.

#AI #LLM

The failed White House / GOP strategy on AI is to stop federal bills and try to stop state bills.

This resulted in two things the tech industry didn’t want:

1. Patchwork of state AI laws

2. Public backlash

Any AI LLM could have devised a better strategy

-- @tedlieu.bsky.social

A reminder, again and again: you cannot trust #KI #LLM models. These days #Perplexity is hallucinating, no, lying its head off! An example: I wanted a

iTerm2 now lets an LLM view & drive a terminal?? That's a huge way to destroy trust. That's just as bad as letting an IDE or email app leak private information.

#llm #ai #enshitification #wtf

Hot take: TDD is easier with LLM-assisted dev. You can tell the computer "write a failing test for XYZ", which is super helpful when you see a bug in the wild that doesn't have a test yet (if it did, your bug wouldn't have made it past CI).

Discuss (I've done the above).
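The red-green loop described here can be sketched with Python's built-in `unittest`. The function `average` and its empty-input bug are hypothetical stand-ins for "XYZ": the first test is the kind you would ask for (it fails against the buggy version), and the guard clause is the fix that makes it pass.

```python
import unittest

def average(values):
    """Hypothetical function under test.

    The buggy version was `return sum(values) / len(values)`, which raises
    ZeroDivisionError on an empty list; the guard below is the fix.
    """
    if not values:
        return 0.0
    return sum(values) / len(values)

class TestAverage(unittest.TestCase):
    def test_empty_input_returns_zero(self):
        # Regression test written first: it reproduced the bug (red)
        # before the guard was added (green).
        self.assertEqual(average([]), 0.0)

    def test_mean_of_values(self):
        # Existing behaviour the fix must not break.
        self.assertEqual(average([1, 2, 3]), 2.0)
```

Run it with `python -m unittest <module>`; once committed, CI runs the same test, so this particular bug cannot quietly return.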

I saw a forum post today where a user asked a question and someone responded with "You should ask an LLM or ChatGPT" and goddamn we are absolutely cooked.

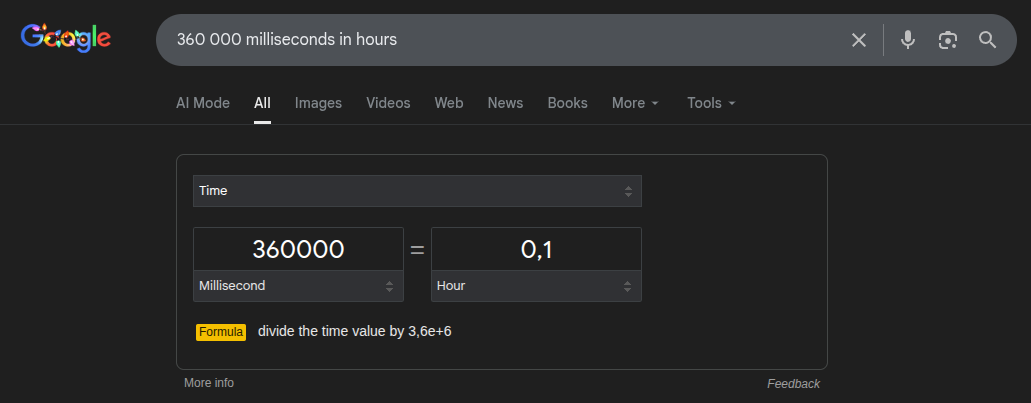

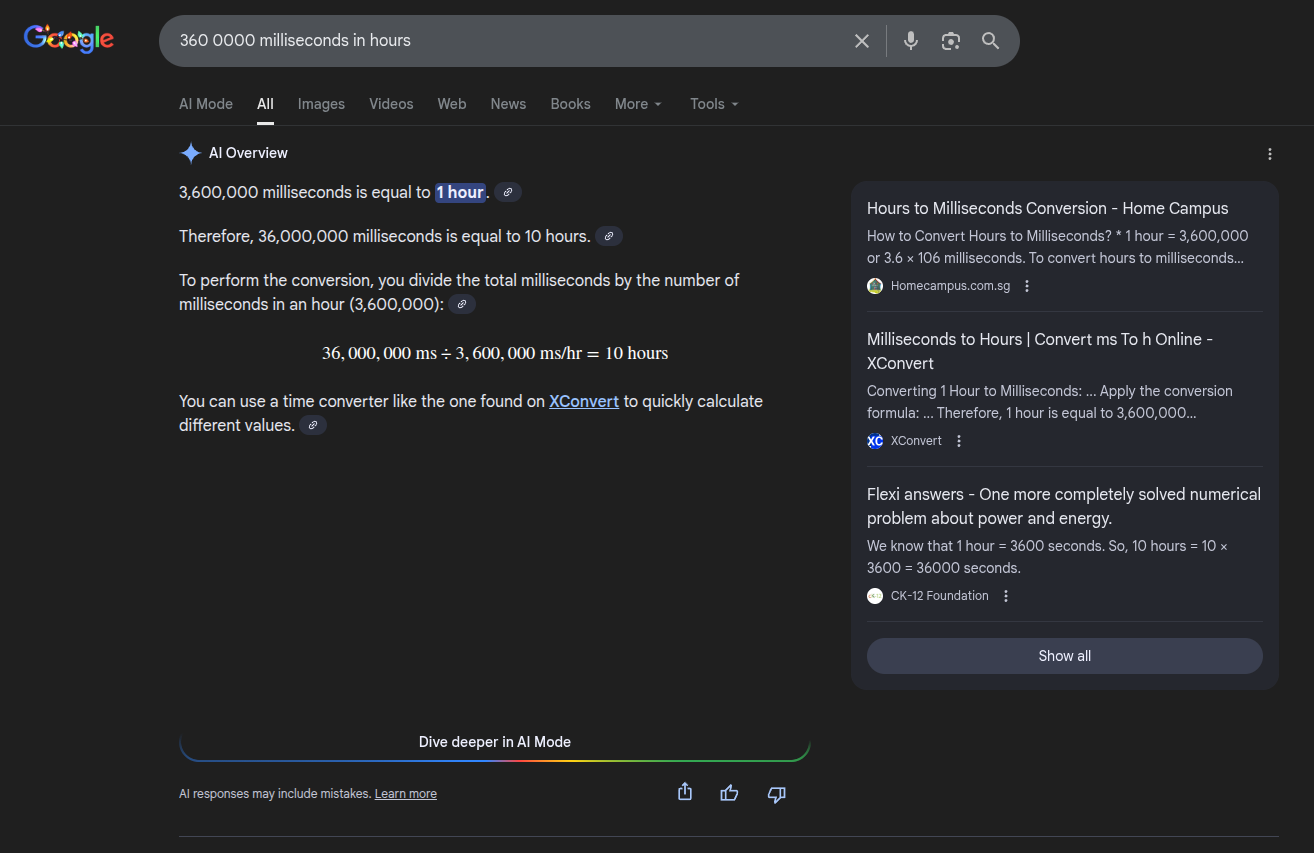

Really need to find a new search engine with @Google going all in on clanker slop for math. The difference of an additional zero:

#google #LLM #clankers

A bit under the radar, because I tend to look at technical updates (MangoWC!, Cosmic at the truly latest branch! etc.), but credit where credit is due!

AerynOS:

- uses Zulip instead of Discord

- is looking at how to move to Codeberg, away from GitHub

- has a clear stance on LLMs

- tries to sponsor other open source projects

- continuously updates its platform and the software it provides, all at the same time.

Whilst going through all ups and downs of a startu…

🎃 Where Winds Meet Players Are Finding Clever And Sexy Ways To Screw With The Game’s AI NPCs

#games

Dear critics of LLM coding, there's tons to criticize.

However, if your argument is an analog to “Wine is terrible! I tasted a Four Loko, and it was awful!” you are in fact making bad arguments and should stop.

It’s super interesting how human-like LLMs are: «this seems hard, I’ll postpone it and pick an easier task» is such a common output.

Some semi-related thoughts here on how slop in business long predates the LLM craze:

https://hachyderm.io/@inthehands/113603661215753448

Must be kind of challenging to write such an article without mentioning that #Apple has been offering exactly that for a few years now …

Your Laptop Isn’t Ready for #LLM s. Yet... https://

Do LLMs make *anything* better? But they seem like the ultimate genie that we now can't put back in the bottle.

#LLM

RE: https://mastodon.social/@borkdude/115799677210560924

Couldn't agree more with what Rich Hickey (creator of Clojure) says here in response to one of those sycophantic "Thank you" emails generated by Claude LLM and sent to various people i…

One of my contractors used an #LLM for a first draft of a client deliverable today.

I don’t allow #AI use for any client-facing work (and try to prohibit it for internal work too, but it’s been… tricky). This person knows it - I’ve worked with them for years, and they are usually very thoughtful and wouldn’t take a shortcut like this. So seeing the obvious AI copy was very confusing.

Thankfully we had a call scheduled this morning, so I decided to handle it there.

The contractor showed up very obviously sick, they told me they took most of past week off but had to force themselves to finish this draft because the deadline is EOD. I asked if they used #AI to draft the document, they immediately confessed and apologized.

Since the deliverable is client-facing, I asked them to explain their ideas to me in conversational language and then used my notes of what they said to help them rewrite the document. We worked through the whole thing in an hour, and I’ve asked them to go rest and recover.

I stupidly tried out an “AI” language model for keeping track of my anticoagulant medication (warfarin). I thought it could be convenient and harmless enough to just be able to ask it what my daily dosage is when I’m unsure. I realise how senseless that sounds now. I was tired. This is from the actual conversation I just had with it, with a few comments from me in square brackets:

Me: What's my dosage today?

LLM: Today is Tuesday, so your dosage is 2 pills.

Me: Wh…

US-based AI startup Arcee releases Trinity Large, a 400B-parameter open-weight model that it says compares to Meta's Llama 4 Maverick 400B on some benchmarks (Julie Bort/TechCrunch)

https://techcrunch.com/2026/01/28/tiny

I made the familiar point yesterday during a panel discussion that even if AI were approaching human intelligence, we're nowhere near human power efficiency (in "intelligence per watt")

But is that actually true anymore? We need huge power to train models, but once we have them trained, distilled and quantized, what do we have?

The human brain draws about 20 watts. An M4 doing local inference on a heavily quantized LLM won't draw more than 40 watts.

New open weights model Kimi K2.5

"self-directed agent swarm paradigm" ,

"For complex tasks, Kimi K2.5 can self-direct an agent swarm with up to 100 sub-agents, executing parallel workflows across up to 1,500 tool calls. Compared with a single-agent setup, this reduces execution time by up to 4.5x. The agent swarm is automatically created and orchestrated by Kimi K2.5 without any predefined subagents or workflow."

'writing is more than just the process by which you obtain a piece of text, right? it's also about finding out what you wanted to say in the first place, and how you wanted to say it. this post existed in my head first as a thought, then it started to gel into words, and then i tried pulling those words out to arrange them in a way that (hopefully) gets my point across. ... i alone can get the thought out and writing is how i do that.'

I created a Persona/System Prompt that I use with a local LLM that I have affectionately named "TOSBot". It analyzes Terms of Service and Privacy Policies of software and web sites.

Too often, it returns these results, especially for AI-powered products and there is no recourse.

¯\_(ツ)_/¯

#ai #tos

The people:

The tech companies:

You asked for it and we listened! We added powerful AI everywhere!!! There is no opt-in or opt-out, as we know you LOVE AI and want it everywhere!!

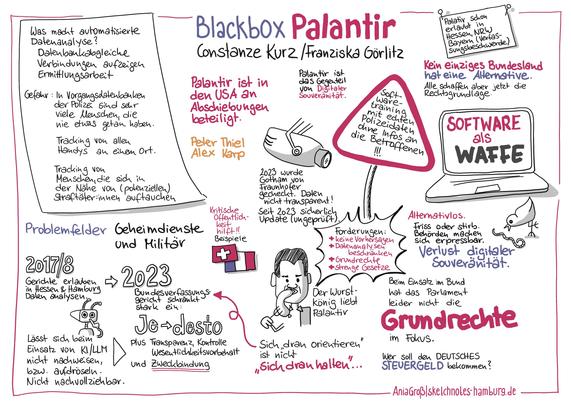

#ConstanzeKurz & #FranziskaGörlitz (does anyone know their handles?) @ #39c3 on Palantir. Software for analyzing and merging data doesn't necessarily need AI/LLMs to arrive at suspicions of its own…

The more I learn about Agentic AI, the more it feels like LLMs behave similar to a next-gen "SQL" where Agents define the "source tables" that can be searched for specific information. The metadata discloses the purpose and content of each agent, so that the LLM can join it when appropriate.

Very flexible free form SQL, with very costly execution.

#ai
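The "agents as source tables" analogy can be sketched as a registry in which each agent carries a description (its metadata), and an orchestrator selects the agents whose metadata matches a request and combines their results, loosely like a JOIN. In a real agentic system an LLM reads the descriptions and decides; the keyword overlap below is a hypothetical, deliberately crude stand-in for that decision, and the agent names and outputs are made up.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List, Set

@dataclass
class Agent:
    name: str
    description: str            # metadata the orchestrator reads, like a table comment
    run: Callable[[str], str]   # "querying" this source; in practice a costly LLM/tool call

def words(text: str) -> Set[str]:
    """Lower-case word set, ignoring punctuation."""
    return set(re.findall(r"[a-z]+", text.lower()))

def select_agents(query: str, agents: List[Agent]) -> List[Agent]:
    """Stand-in for the LLM's choice: keep agents whose description shares a word with the query."""
    q = words(query)
    return [a for a in agents if q & words(a.description)]

def answer(query: str, agents: List[Agent]) -> Dict[str, str]:
    """'JOIN' step: collect results from every selected agent, keyed by agent name."""
    return {a.name: a.run(query) for a in select_agents(query, agents)}

# Hypothetical agents for illustration only.
agents = [
    Agent("weather", "forecast temperature rain", lambda q: "sunny"),
    Agent("calendar", "meetings schedule appointments", lambda q: "2 meetings"),
]
print(answer("Which meetings are on my schedule?", agents))
```

Each selected agent's `run` is where the "very costly execution" lands: the more agents the metadata matches, the more model and tool calls a single question fans out into.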

“The promise of AI for engineers is getting rid of engineers.

Tools like GitHub’s Copilot write code, specs, review, and create pull requests. They create and run tests, and do so in the background while the developers engage in more productive activities, such as meetings.”

From my latest blog on @…

I really feel bad for sysadmins who don’t have great English skills. There’s no way a world of LLM translator bots can ever get all the discussions amongst English-speaking sysadmins close enough to correct. Too much jargon and more importantly too much nuance in how words are used. I’ve spent a lot of time crafting technical prose, often finding it necessary to proofread and harmonize precisely how I say things. I doubt any LLM can be equivalently fastidious.

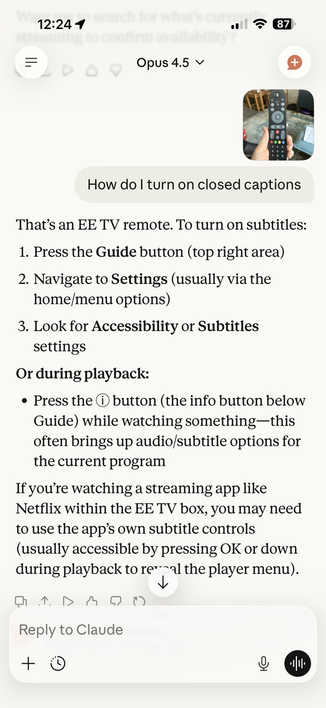

If I may, folks, if you’re trying to figure out remote control settings on your folks’ TVs, consider uploading a pic to your fave LLM to ask. Just worked like a charm for me.

I was just thinking about how the fact that #Musk named his AI "Grok" is evidence that he "reads sci-fi" in the same way he "plays video games." Like, he claims to do it but when it comes time to show the evidence it's clear he does not actually "grok" it.

Like... To grok something is to have a layer deeper than simple knowledge, but mathematically encoding statistical relationships between words is pretty obviously not even understanding, much less "grokking". In the book, the ability to grok something is also the ability to annihilate that thing with a thought. Even if we pretend that an LLM actually *was* something that could become AGI (which it's not), this name would imply the AI would have the power to annihilate reality. That's bad. That's a bad name for an AI.

And why would a greedy fascist name something of his after something an anarchist communist space Jesus taught to the hippie cult he started? There are so many layers of facepalm to this. It's some kind of php-esque fractal of incompetence.

Like, there's no reason to talk about this but my brain does this to me sometimes and now it's your problem.

The absolutely weirdest software development shit I’ve read this year is feeding support requests directly into an LLM code generator.

I don't envy "vibe coders". I mean, let's for a minute assume that their vision is not a pipe dream, but an accurate prediction of the future.

For a start, what's their plan for life? Driving a tool whose primary selling point is that anyone can use it. And I'm not even talking about all the competition among vibe coders themselves. I'm talking about people realizing that they can cut out the middleperson and do the coding themselves.

The way I see it, vibe coders are a bit like typists (with no offense to typists). Their profession is a product of a novelty. And just like typists largely disappeared when typewriters and then computers became commonplace, so are vibe coders bound to disappear when vibe coding becomes commonplace.

And are true programmers going to become obsolete? Well, let me ask you: did the proliferation of cars and corresponding self-service skills render car mechanics obsolete? On the contrary. The way I see it, the proliferation of slopcode will only make competent programmers all the more necessary.

What vibe coders are saying is basically this: "This new automated self-service kit makes car maintenance so easy. Car mechanics will become obsolete now. Everyone's just going to hire *me* to run this kit instead."

#AI #LLM #VibeCoding

AI on your blog? Using an LLM for research, proofreading or images? No big deal, just please label it. Hiding it means selling an illusion instead of content. | #Couchblog 👀 #Blogs #KI

Browser makers' goal is to have an LLM use their AI browser to browse AI generated slop. Forever.

Lastly, whenever I do work with people through a specific case of them having felt like they needed to rely on an LLM, it often goes like this.

They feel guilty and ashamed.

They explain how impossible getting that task done felt with their time and energy constraints.

Yet when I talk them through other ways of solving the same problem, often we end up completing the work much quicker than it even took them to prompt the damn LLM to begin with.

And at the end, I have often seen relief - as if the person has forgotten that there are ways to work quickly while trusting their own brain, getting help in collaboration with another person rather than from a machine.

I do kind of love seeing someone realize that the AI they thought was saving them time actually caused more hassle and stress than it was worth. And that there’s a better way.

ByteDance launches an AI voice assistant powered by its Doubao LLM, available first on ZTE's Nubia M153 smartphone prototype; Doubao has an estimated 159M MAUs (Reuters)

https://www.reuters.com/world/china/bytedance-rolls-out-ai-…

I am not against AI. I am against technology built on copyright violation and sweatshop labor that is actively undermining our ability to save the planet from baking so that people can produce more propaganda and pollute the common well.

If the Venn Diagram seems like a circle, that's not my fault.

#AI #LLM

But now, LLMs offer a beautiful new promise: your brilliant notions can proceed full speed without any pesky underlings at all!

You can just •build the app• or •launch the campaign• or whatever, and nobody will tell you annoying things like “that won’t work” or “that timeline is unrealistic” or “that will create even larger problems” or “people will die.” The chat LLM is there to say YES to your hitherto-unacknowledged brilliance!

6/

Some 2025 takeaways in LLMs: reasoning as a signature feature, coding agents were useful, subscriptions hit $200/month, and Chinese open-weight models impressed (Simon Willison/Simon Willison's Weblog)

https://simonwillison.net/2025/Dec/31/the-year-in-llms/

RE: https://infosec.exchange/@cR0w/115616843117374981

This one is a bonus, in addition to "running an LLM on your code/machine is by definition a security hazard".

Okay, could someone point me to a good #NoAI / #NoLLM manifesto to link to?

Like, I've tried searching but apparently "NoAI" and "NoLLM" have already been claimed by LLM companies, and the queries with the f-word… okay, I should not have tried these.

2025 LLM Year in Review: shift toward RLVR, Claude Code emerged as the first convincing example of an LLM agent, Nano Banana was paradigm shifting, and more (Andrej Karpathy/karpathy)

https://karpathy.bearblog.dev/year-in-review-2025/

The new Ahoy is… boring. It sounds like an LLM generated script. :(

Zhipu AI launches a share sale to raise ~$560M in a Hong Kong IPO at a valuation of ~$6.6B, which would make it the first LLM developer listed in Hong Kong (Themis Qi/South China Morning Post)

https://www.sc…

Sources: Nvidia is in advanced talks to acquire Tel Aviv-based AI21, which is building its own LLMs, for $2B to $3B; the deal would resemble an acquihire (CTech)

https://www.calcalistech.com/ctechnews/article/rkbh00xnzl

An interview with Google DeepMind Nobel laureate John Jumper on the creative "off-label" uses for AlphaFold, combining AlphaFold's power with LLMs, and more (Will Douglas Heaven/MIT Technology Review)

https://www.technologyrev…

OpenAI and longtime US government contractor Leidos announce a partnership to roll out generative and agentic AI tools for specific missions at federal agencies (Miranda Nazzaro/FedScoop)

https://fedscoop.com/openai-chatgpt-le

Stanford researchers develop a web-based tool that uses an LLM to downrank X posts with antagonistic language in a user's feed, to reduce "partisan animosity" (Stanford University)

https://news.stanford.edu/stories/2025/11/social-m…

StepFun, a Chinese AI startup that develops LLMs and has partnered with automaker Geely and smartphone brands like Oppo and Honor, raised a ~$717M Series B (Eudora Wang/DealStreetAsia)

https://www.dealstreetasia.com/stories/stepfun-series-b-round-470495/

Financial stress from AI infrastructure spending, overhiring, and recession fears, rather than AI adoption, is likely driving layoffs in the tech sector (Fast Company)

https://www.fastcompany.com/91435192/chatgpt-llm-openai-jobs-amazon

A researcher details an LLM-based AI agent that "demonstrated a near-flawless ability" to bypass bot detection methods while answering online survey questions (Emanuel Maiberg/404 Media)

https://www.404media.co/a-researcher-made-