A Microsoft analysis of 37.5M Copilot conversations from January-September 2025 finds health-related topics dominated users' questions, and more (Megan Morrone/Axios)

https://www.axios.com/2025/12/10/microsoft-copilot-personal-productivity

"According to the analysis by the firm [Oxford Economics], 'companies do not appear to be replacing their employees with AI on a large scale,' which suggests instead that they may be using the technology as a pretext to systematically reduce their headcount."

Michael Burry, Anthropic co-founder Jack Clark, and Dwarkesh Patel on the future of AI, whether AI tools improve productivity, job losses due to AI, and more (The Substack Post)

https://post.substack.com/p/the-ai-revolution-is-here-will-the

Amazon Kindle Scribe Colorsoft review: the $630 pen-enabled e-reader is a tough sell for most people, even with its big 11" display and productivity features (Samantha Kelly/Bloomberg)

https://www.bloomberg.com/news/features/20

Climate change is real and threatens the food supply in the world's most populous country, reports my colleague @… from India.

"Traditional farmland will shrink by 15-20% due to salinity and soil degradation."

Geopolitical conflicts derail global fight against hunger amid climate threats, scienti…

If you're not a programmer: "lines of code written" is a completely absurd metric for measuring productivity in software development.

It's like paying a cook by the amount of salt they use.

RE: https://cyberplace.social/@GossiTheDog/116011754710793421

Microsoft Research has similar studies. Using AI assistants hinders your acquisition of skills and is not even improving productivity. Surely we just have to do more of it, right?

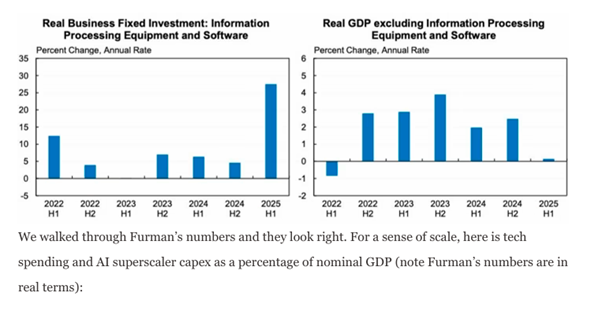

AI and the railway mania

Michael Roberts.

"A widely cited study from MIT found that so far, 95 per cent of generative AI projects produce no return in productivity growth or profits. To justify the required investment, annual data-centre revenues would need to rise from $20bn today to about $2trn. Existing revenues will fall short by $800bn."

Some interesting figures from a new report by the Energy Transition Commission:

All-electric cars are more energy efficient than plug-in hybrid and combustion-engine vehicles, and weight is much less of a factor (in part due to regenerative braking).

https://www.

I applied the three-finger salute to the two pieces of software that were still unresponsive after two hours. We used to have semi-decent software, but lately the piss-poor upgrades and AI SLOP have infected the "productivity" software. I could update 5 documents, generate a PDF for each and rebuild the release folder index in one day, or a day and a half if the VPN was slow. All I got done (day 2 of frustration) was three of the smallest documents; on the midsize document it choked and I…

The assumption is that one day large language models and related AI technologies fostered by Google (Gemini) and OpenAI (ChatGPT) will actually become a great and infallible productivity tool for genuine work.

It is already decent at providing basic overviews of highly covered, well-sourced topics, even as hallucinations and sycophancy continue to dog the tech, particularly in situations where accountability is more critical.

Despite today's downsides, many companies ar…

A software engineer explains AI fatigue, compounded by a FOMO treadmill of using labs' latest tools, thinking atrophy, and more, alongside boosted productivity (Siddhant Khare)

https://siddhantkhare.com/writing/ai-fatigue-is-real

Switching to Super Productivity – #opensourcesoftware

Studies showing that "AI" isn't creating the productivity gains that "AI" boosters promised are important but I think that they sadly don't help us win as much as some believe. We're winning at the wrong game:

https://tante.cc/2026/01/25/winning-the-wrong-g…

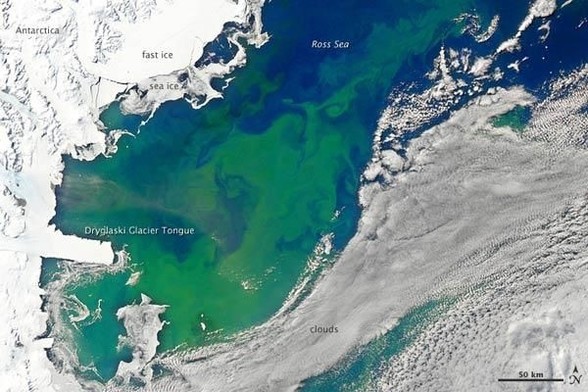

Weekend #Plankton Factoid 🦠🦐

In recent years it has been found that phytoplankton productivity can be fueled by deep-sea hydrothermal vents. These vents produce iron and dense microbial blooms which can locally stimulate algae blooms, but it wasn't clear how this linkage of ecosystems separated by kilometers was possible. Now, an Antarctic study suggests that earthquakes can cause violen…

If I had a penny for every time I heard something like

"We're going to track increases in productivity that we gain by adopting GenAI"

1. So you're assuming it's an increase

2. Against what control group

3. With no acknowledgement of confounding variables or experiment design

4. Around the...famously open problem of measuring software engineering productivity?
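Point 2 is the one a small calculation can make concrete. The sketch below is a minimal difference-in-differences comparison, assuming you already have some per-period metric for a team that adopted GenAI and a comparable team that did not. The function name `diff_in_diff`, the team variables and all the numbers are hypothetical, and nothing here addresses point 4: it only helps if the metric itself means something.

```python
from statistics import mean


def diff_in_diff(treat_before, treat_after, control_before, control_after):
    """Difference-in-differences estimate of a treatment effect.

    Each argument is a list of per-period measurements of the same
    (hypothetical) metric. The control group's change over the same
    period is subtracted, so trends that hit both teams (seasonality,
    a new release cycle) are not credited to the tool being evaluated.
    """
    treat_change = mean(treat_after) - mean(treat_before)
    control_change = mean(control_after) - mean(control_before)
    return treat_change - control_change


# Hypothetical numbers: both teams improved, the GenAI team only slightly more.
genai_team = ([10, 12, 11], [14, 15, 13])    # (before, after)
control_team = ([9, 10, 11], [12, 13, 11])   # (before, after)

naive_gain = mean(genai_team[1]) - mean(genai_team[0])
effect = diff_in_diff(*genai_team, *control_team)
print(f"naive before/after gain: {naive_gain:.1f}")
print(f"difference-in-differences estimate: {effect:.1f}")
```

With these made-up numbers the naive before/after gain is 3.0, but most of it also shows up in the control team, leaving an estimate of 1.0. This is still subject to point 3: the two teams must be comparable, and any confounder that affects only one of them will bias the result.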

From the IMF

"the Canadian economy remains much less integrated than its global footprint would suggest. Goods, services, and workers face significant barriers when moving across provincial and territorial lines—a fragmentation that affects productivity, competitiveness, and overall resilience"

Most of the benefits would flow to the smaller provinces

#Canpoli

So, I’m sitting here in my default coffee shop, typing on my phone and wondering if I could actually use this for some kind of productivity.

I do have my iPad, which I bring with me occasionally, but not today.

All I would need is to bring a small keyboard with me, and my MagSafe pop out stand, and I’m pretty much sorted!

#iphone #WFH #coffeeshop

This can’t possibly be a unique observation, but generative AI boosters, if they’re not pedophiles, seem to be primarily engaging in productivity aesthetic, which is to say, lifestyle blogging their way through pointless busy work

New #VS2026 Insiders update brings fast scrolling, middle‑click scroll, HTML‑rich copy/paste, slimmer margins, colorized completions, partial‑accept suggestions, and streamlined Markdown preview. Small changes, big gains in flow. #visualstudio

Whenever you say "productivity", a kitten dies.

The #genAI *economic* bubble will burst sooner or later, as the technology is simply unable to deliver the promised productivity gains (and thus the promised ROI), so the AI companies are massively overvalued.

See, e.g., https://www.

"New #words – 26 January 2026" @ Cambridge dictionaries:

"FOBO" 'abbreviation for “fear of becoming obsolete”', "vibe revenue" 'money or funding that an #AI company is given because people are excited about the potential of AI, rather than because the com…

Goodbye Super Productivity – #superproductivity

#AI is old news. Innovators must ask themselves: in 2026, how can I supercharge my productivity by incorporating labubus into my process?

These demands to "work more" and "be in the office more" are a bit contradictory, aren't they?

The Meetings Will Continue

Until Productivity Improves

When articles talk about ‘productivity’ (“UK companies saw an average 11.5% productivity increase thanks to AI”) are they subjective (“I felt more productive”) or objective like “GDP per hour”? ‘cause 11% GDP growth would be amazeballs, and “email faster” less so…

https://www.

“The promise of AI for engineers is getting rid of engineers.

Tools like GitHub’s Copilot write code, specs, review, and create pull requests. They create and run tests, and do so in the background while the developers engage in more productive activities, such as meetings.”

From my latest blog on @…

Seeing a lot of people getting into tiled and scrolling window managers.

I actually like my desktop with overlapping windows and being a bit in a chaotic state and slightly different all the time.

My brain needs some variety and breaks from orderly processes, because I'm a human being and I've evolved to work best in semi-chaotic circumstances.

Obviously you do you, but I consider this trend, at least to some degree, productivity wankery.

I think this brings me up to five separate Atlassian accounts now 🙃

Dear #Apple

"macOS Tahoe introduces a stunning new design, along with delightful ways to work across your devices and boost your productivity."

as Bob Dylan yelled on stage in London... "You are a liar, I don't believe you."

Evidence that AI is normal technology includes AI systems that are good enough to be useful but not good enough to be trusted, continuing to require human oversight that limits productivity gains;

prompt injection and security vulnerabilities remain unsolved, constraining what agents can be trusted to do;

domain complexity continues to defeat generalization, and what works in coding doesn’t transfer to medicine, law, science;

regulatory and liability barriers prove high enou…

Anthropic's employees self-report using Claude in 60% of work and achieving a 50% productivity boost, often using it for debugging and code understanding, more (Anthropic)

https://anthropic.com/research/how-ai-is-transforming-work-at-anthropic/

"If “AI” is actually more expensive than paying actual people actual wages that’s still a good investment for capital because it is about breaking up the structures, networks and organizations that help workers organize and fight for labor standards and fairer wages."

(Original title: Winning the wrong game)

https://

Exploring Performance-Productivity Trade-offs in AMT Runtimes: A Task Bench Study of Itoyori, ItoyoriFBC, HPX, and MPI

Torben R. Lahnor, Mia Reitz, Jonas Posner, Patrick Diehl

https://arxiv.org/abs/2601.14608

Tackling complex tasks can feel like taming chaos, but classical music is a brilliant remedy. A well-composed symphony turns chaos into clarity. 🎻 Personally, it elevates my focus and productivity. Do you harness music to boost your performance? 🎶 Let's orchestrate success with the right rhythm!

We have an unhealthy relationship to productivity, especially in the West, and especially in societies based around and rooted in capitalism that say we should be constantly hustling. We should be constantly doing more, more, more. https://www.overcomecompulsivehoarding.co.

Went with Windows 98 w/ Office ‘97 on the ThinkPad, works great on it.

Toshiba will be Windows 95 & Office ‘95 and whatever weird Windows 95 productivity apps I can find.

https://hachyderm.io/@thomasfuchs/115578256800642377

📈 Build dashboards: Visualize input/output token usage, sessions & conversations, total costs in USD, terminal type distribution (#VSCode, Apple Terminal), requests per user & tool type usage (Read, Edit, LS, Bash)

🎯 Real insights: Measure ROI & productivity gains, spot performance bottlenecks & reliability issues, track adoption trends & user trust via accept/reject …

I just found a relatively new tool for syntax-aware diff/merge operations on git repositories ( #Mergiraf ).

Unlike other tools I've tried in the past, this one is also diff3-friendly.

- https://mergiraf.org/

- https://lwn.net/Articles/1042355/

For the weirdos like me who prefer `rebase` over `merge`, this can be a great mood & productivity booster.

Tech startups have started to offer nicotine pouches as a free perk to employees, as some claim the products help them focus despite health hazards (Angel Au-Yeung/Wall Street Journal)

https://www.wsj.com/tech/tech-star…

📣📣📣 LLM-powered coding mass-produces technical debt. 📣📣📣

The expectations around them are sky-high, but many organizations are falling behind because of them. 📉

WHY IT MATTERS? CTOs lament slowdowns and production issues traced to company-wide rollouts of LLM-powered coding assistants. The AI promise clashes with the reality of technical debt and security issues. 🐛

Read more:

RE: https://tldr.nettime.org/@tante/115955906339726373

"AI" is not about productivity gains but about crushing labour.

Who would have guessed that appointing an android as Health Secretary would lead to a muddle?*

Wes Streeting accused of ‘chaotic and incoherent approach’ to NHS reform | Wes Streeting | The Guardian

https://www.theguardian.com/politics/2025/

A little over six years ago I switched from Taskwarrior to Todoist.

Todoist's price increase got me thinking and prompted me to look for alternatives.

After extensively testing "Super Productivity", "Vikunja" and "Logseq" for task management, I'm now back on Taskwarrior.

As a web frontend (it also works very well on a phone in landscape mode), I use Taskw…

Dow plans to cut 4,500 staff to save costs and will rely on AI to boost productivity, resulting in $1.1B to $1.5B in charges; it currently employs ~34,000 staff (Rob Curran/Wall Street Journal)

https://www.wsj.com/business/earnings/dow-<…

The Meetings Will Continue

Until Productivity Improves!

#itsBeenOneOfThoseDays

Has anyone calculated the loss of productivity when people get dozens of AI-powered spam calls a day?

So, "#AI boosted your productivity"? Well, are you a software developer or a factory worker?

Productivity is a measure of predictable output from repetitive processes. It is how much shit your factory floor produces. Of course, once attempts to boost productivity start affecting the quality of your product, things get hairy…

"Productivity" makes no sense for creative work. It makes zero sense for software developers. If your work is defined by productivity, then it makes no sense to use an #LLM to improve it. You can be replaced entirely.

Artists get that. The fact that many software developers don't suggests that the trade took a wrong turn at some point.

Inspired by #NoAI

Can’t wait for the unquestionably super annoying in-app upsells in the free versions of Apple’s productivity apps

https://www.apple.com/newsroom/2026/01/introducing-apple-creator-studio-an-inspiring-collection-of-creative-apps/

AI workplace agents startup Genspark raised a $275M Series B at a $1.25B valuation and says it hit $50M in annualized revenue after pivoting from AI search (Anna Tong/Forbes)

https://www.forbes.com/sites/annatong/2025/11/20/genspark…

Anthropic researchers say rich countries' higher AI use risks deepening economic disparities and widening living standard gaps, driven by productivity gains (Financial Times)

https://www.ft.com/content/3ad44e30-c738-4356-91fb-8bb2368685c4

Symbolic.ai, founded by ex-eBay CEO Devin Wenig and Ars Technica cofounder Jon Stokes, partners with News Corp to offer AI tools to WSJ, Barron's, and others (Ben Sherry/Inc)

https://www.inc.com/ben-sherry/this-ai-startup-just-lan…