Cynicism, "AI"

I've been pointed to the "Reflections on 2025" post by Samuel Albanie [1]. The author's writing style makes it quite a fun read, I admit.

The first part, "The Compute Theory of Everything", is an optimistic piece on "#AI". Long story short, poor "AI researchers" have been struggling for years because of the predominant misconception that "machines should have been powerful enough". Fortunately, now they can finally get their hands on the kind of power that used to be available only to supervillains, and all they have to do is forget about morals, agree that their research will be used to murder millions of people, and accept that a few more millions will die as a side effect of the climate crisis. But I digress.

The author is referring to an essay by Hans Moravec, "The Role of Raw Power in Intelligence" [2]. It's also quite an interesting read, starting with a chapter on how intelligence evolved independently at least four times. The key point inferred from that seems to be that all we need is more computing power, and we'll eventually "brute-force" all AI-related problems (or die trying, I guess).

As a disclaimer, I have to say I'm not a biologist. I'm just a random guy who has read a fair number of pieces on evolution. And I feel like the analogies drawn here are misleading at best.

Firstly, there seems to be an assumption that evolution inexorably leads to higher "intelligence", with a certain implicit assumption about what intelligence is. Per that assumption, any animal that gets "brainier" will eventually become intelligent. However, this seems to miss the point that neither evolution nor learning operates in a void.

Yes, many animals did attain a certain level of intelligence, but they attained it through a long chain of development, while solving specific problems, in specific bodies, in specific environments. I don't think that you can just stuff more brains into a random animal and expect it to attain human intelligence. The same goes for a computer: you can't expect that, given more power, algorithms will eventually converge on human-like intelligence.

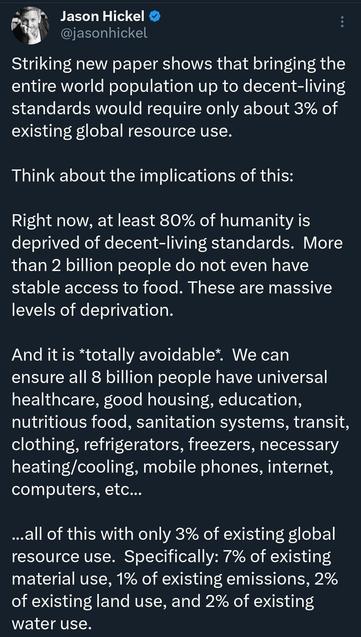

Secondly, and perhaps more importantly, what evolution succeeded at first was achieving neural networks that are far more energy-efficient than whatever computers are doing today. Even if "computing power" did indeed pave the way for intelligence, what came first was extremely efficient "hardware". Nowadays, humans seem to be skipping that part. Optimizing is hard, so why bother with it? We can afford bigger data centers, we can afford to waste more energy, we can afford to deprive people of drinking water, so let's just skip to the easy part!

And on top of that, we're trying to squash hundreds of millions of years of evolution into… a decade, perhaps? What could possibly go wrong?

[1] #NoAI #NoLLM #LLM